Reconfiguring Local AWStats for Azure Web App

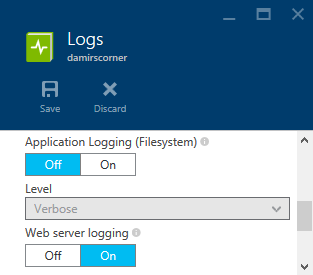

When I moved my blog to Azure, I broke the AWStats statistics that I had configured back when I was still hosting the blog myself. Almost a month after the move, it was about time to get them working again. Of course, I had already thought about this during the migration and enabled diagnostics for my web app in Azure; otherwise I would have lost the traffic information for that period. This is easily done in the All Settings menu for the web app by clicking the Diagnostic logs menu item. Turning on the Web server logging option enables standard IIS logging.

The log files are saved to the LogFiles/http/RawLogs subfolder on the server. They are automatically deleted after a period of time, so you will want to download them and store them elsewhere. I chose to use FTP and automate the process with PowerShell.

There are two steps involved:

First, a list of files in the folder needs to be retrieved:

    $credentials = New-Object System.Net.NetworkCredential($ftpUsername, $ftpPwd)
    [System.Net.FtpWebRequest]$ftp = [System.Net.WebRequest]::Create($ftpServer)
    $ftp.Credentials = $credentials
    $ftp.Method = [System.Net.WebRequestMethods+Ftp]::ListDirectory
    $response = $ftp.GetResponse().GetResponseStream()
    [System.IO.StreamReader]$reader = New-Object System.IO.StreamReader($response)

Then each of the files from the response needs to be downloaded:

    $webClient = New-Object System.Net.WebClient
    $webClient.Credentials = $credentials
    $localFiles = @()
    while (($file = $reader.ReadLine()) -ne $null)
    {
        $localFile = $file.Substring(7)
        $localFiles += $localFile
        $localPath = Join-Path $downloadDir $localFile
        $webClient.DownloadFile($ftpServer + $file, $localPath)
    }
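The `Substring(7)` call in the loop above assumes that the prefix is always exactly seven characters long. As a hedged alternative, the sketch below removes everything up to the first hyphen with a regular expression, so it would keep working if the prefix length ever changed. `Get-LocalLogName` is a hypothetical helper name and is not part of the published script.

```powershell
# Hypothetical helper: derive the local filename from the name on the server.
function Get-LocalLogName {
    param([Parameter(Mandatory)][string]$RemoteName)

    # Remove a leading "<prefix>-" only when a numeric timestamp and ".log"
    # follow it; names that do not match the pattern are returned unchanged.
    return $RemoteName -replace '^[^-]+-(?=\d+\.log$)', ''
}

Get-LocalLogName '1d66b1-201509260745.log'   # 201509260745.log
```

Inside the download loop, the call `Get-LocalLogName $file` could then stand in for `$file.Substring(7)`.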

Before saving each file locally, I strip the first seven characters of the filename to remove the unique prefix and keep only the timestamp part of the name (e.g. this renames 1d66b1-201509260745.log to 201509260745.log). I also store the names of all downloaded files in a local array to keep a record of the files which still need to be processed by AWStats. Processing is done by invoking the standard update script for each of the files, with the LogFile value overridden accordingly:

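    # @() keeps the result an array even if only one file was downloaded
    $localFiles = @($localFiles | Sort-Object)

    # Length - 1: the last (newest) file is left out on purpose
    for ($i = 0; $i -lt $localFiles.Length - 1; $i++)
    {
        $localPath = Join-Path $downloadDir $localFiles[$i]
        $cmd = "$perlPath $awstatsPath -config=damirscorner -LogFile=$localPath"
        Invoke-Expression $cmd
    }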

Since the downloaded file with the most recent timestamp is still being written to, I skip it after sorting all the filenames to be processed. Once a newer log file is created, this one won't have the most recent timestamp any more and will get processed as well.
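The same skip-the-newest rule can also be written without index arithmetic. This is only a minimal sketch, assuming PowerShell 5.0 or later (where `Select-Object` has the `-SkipLast` parameter) and filenames whose timestamps sort chronologically as text. `Get-CompletedLogFile` is a hypothetical name.

```powershell
# Hypothetical helper: return every log file name except the newest one.
function Get-CompletedLogFile {
    param([string[]]$FileNames)

    # Sort by name, then drop the last entry, which may still be written to.
    $FileNames | Sort-Object | Select-Object -SkipLast 1
}

$sample = '201509260745.log', '201509250612.log', '201509270101.log'

# Wrapping the call in @() keeps the result an array
# even when only one file, or none, remains.
$completed = @(Get-CompletedLogFile $sample)
$completed   # 201509250612.log, 201509260745.log
```

When only a single log file has been downloaded, the result is empty, so nothing is handed to AWStats until a newer file appears.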

I published the final script as a Gist. All the configuration variables used in the snippets above are defined there, with sensitive data removed, of course.
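To try the snippets without opening the Gist, the variables they rely on could be declared roughly as follows. Every value here is a placeholder assumption, not the real configuration: the host name, the account, and all paths are made up for illustration. The only real constraint comes from the download loop, which appends the filename directly to `$ftpServer`, so that value has to end with a slash.

```powershell
# Placeholder values only; replace each one with your own settings.
$ftpServer   = 'ftp://example.invalid/LogFiles/http/RawLogs/'  # hypothetical host; must end with "/"
$ftpUsername = 'mysite\deployment-user'                        # hypothetical FTP account
$ftpPwd      = 'change-me'                                     # do not commit a real password
$downloadDir = 'C:\Logs\Azure'                                 # hypothetical local folder for downloaded logs
$perlPath    = 'C:\Perl\bin\perl.exe'                          # hypothetical path to the Perl interpreter
$awstatsPath = 'C:\awstats\wwwroot\cgi-bin\awstats.pl'         # hypothetical path to the AWStats script
```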